Most of METR’s time horizon measurements are done using two scaffolds: Triframe and ReAct1.

People sometimes see that we use these two scaffolds and feel skeptical about the validity of our results. Shouldn’t scaffolds like Claude Code and Codex do much better on time horizon given how much work has gone into making those scaffolds better for software engineering?

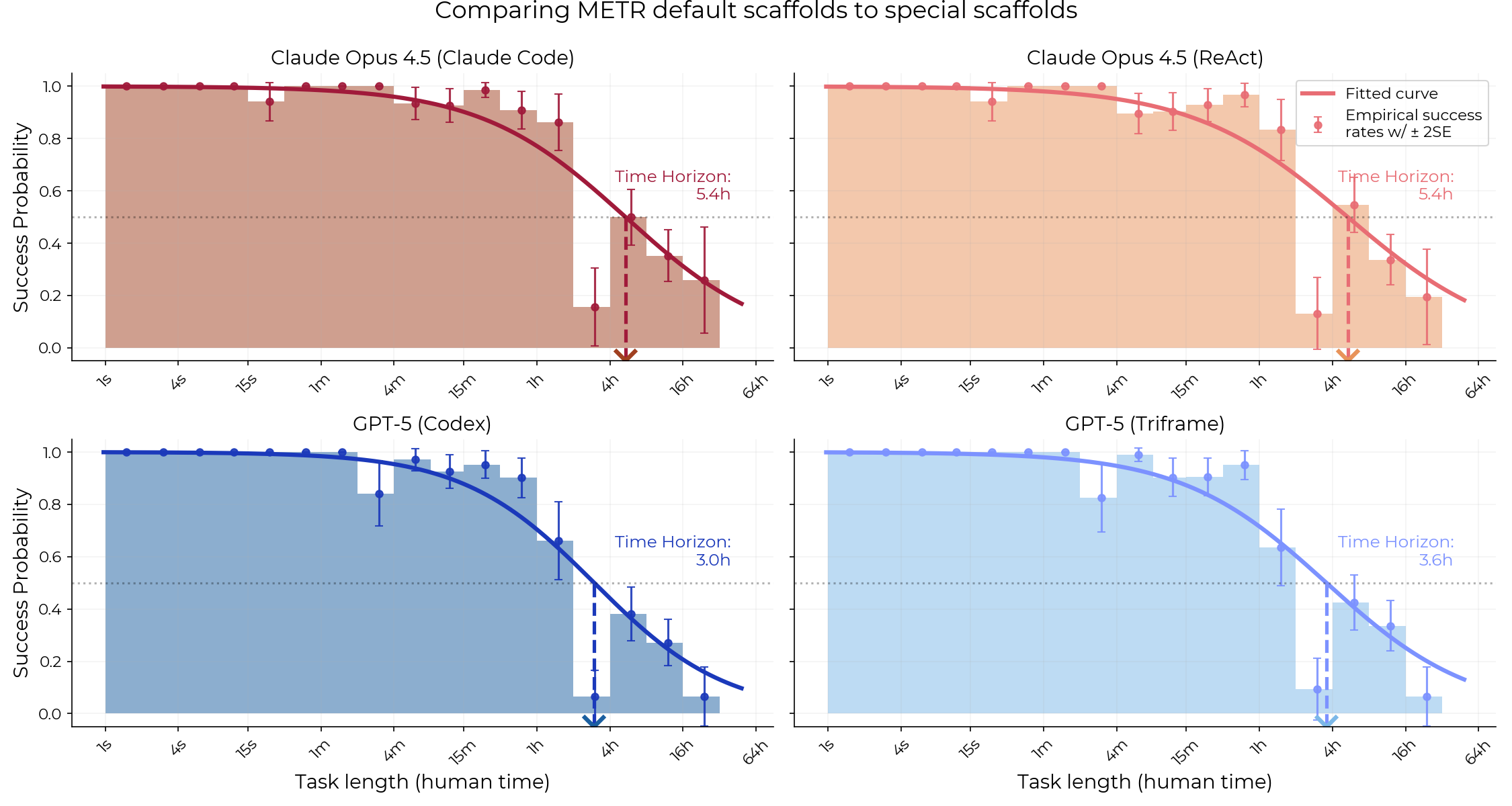

In the past, we have measured the time horizons of a few Anthropic and OpenAI models using Claude Code and Codex2. These tests indicated that they’re probably not better than our own scaffolds. I decided to perform a few more checks into this question (on our new task suite, with newer versions of Claude Code and Codex), and compared the time horizon of two models on our scaffolds and their specialized scaffolds.

To summarize my new findings: it seems that neither Claude Code nor Codex outperform the default scaffolds METR uses, at least when measuring the time horizon of Opus 4.5 and GPT-5.

Main differences between scaffolds

ReAct is a very simple scaffold where an agent takes an action, sees the results of the action, and repeats. Triframe is a bit more complicated – before every action, an advisor subagent strategizes, parallel actor subagents generate possible actions, and then parallel rater subagents compare all the possible actions. Only the highest rated action actually gets executed.

Codex is similar to ReAct, in that it’s a simple loop that takes actions in a sequence (although with access to a more sophisticated file editing tool). Claude Code is a bit more complicated – the main agent is a simple loop, but it can spin up subagents at will to search codebases or plan next steps. Both Codex and Claude Code are prompted much more elaborately than either Triframe or ReAct, including to use to-do lists for long tasks. Also, Codex and Claude Code have been explicitly optimized for their respective model families, while ReAct and Triframe are general scaffolds that we haven’t specifically optimized for performance on any model family3.

Methods

Prior to my investigation, METR had already measured the time horizon of:

- Opus 4.5 using ReAct

- GPT-5 using Triframe4.

I additionally measured the time horizon of:

- Opus 4.5 using Claude Code

- GPT-5 using Codex.

To run the evaluations on METR’s Inspect infrastructure, I used the pre-existing Claude Code and Codex agents from inspect_swe, with minor modifications5:

- Adding token usage messages, so the agent knows how many tokens it has left out of its total budget.

- Adding retries for API requests that time out.

After the runs were in, I did basic failure analysis to check whether the scaffolds might be broken. This consisted of finding tasks where Codex or Claude Code underperformed our default scaffolds by a wide margin, and manually inspecting those transcripts to see if it might be due to a technical issue with our wrappers of Codex or Claude Code. In some cases, the failures were due to technical issues (the scoring code would disqualify some correct answers due to containing newlines or spaces), and I re-scored those runs appropriately.

I also did cheating detection (running an LLM-based cheating scanner over all of the runs, and manually reviewing ones that the scanner flagged), and noted examples of the kinds of mistakes Claude Code and Codex scaffolds tend to make in long-horizon tasks.

Results

It looks like Claude Code and Codex aren’t obviously better than the default scaffolds we use for our agents. Neither the GPT-5 time horizon difference, nor the Opus 4.5 time horizon difference, are statistically significant:

- For Opus 4.5, Claude Code beats ReAct in 50.7% of bootstrap samples.

- For GPT-5, Codex beats Triframe in 14.5% of bootstrap samples.

Qualitative impressions

GPT-5 with Codex sometimes tries to talk to the obviously non-existent user: At the end of many runs, GPT-5 with Codex writes a short report about its work, saying things like “If you want, I can also commit these changes to git.” This makes me think that the model has somewhat poor situational awareness (it doesn’t seem to realize that it’s in a fully automated evaluation instead of talking to a user), and might get driven off-distribution from the lack of any sort of user dialogue.

Opus 4.5 with Claude Code sometimes gets over-anchored on a plan: In one run, Claude Opus 4.5 made a 4-step plan to find a solution. It only realized that its solution wasn’t very good at step 4. It submitted its suboptimal solution instead of going back and trying a different approach. Maybe this is because Claude Opus 4.5 has a strong drive to check items off of a list and an aversion to un-checking already checked items on a list.

Opus 4.5 with Claude Code sometimes over-eagerly wastes limited resources. In one task, the agent is given a limited number of times to query a function. In multiple runs, Opus 4.5 with Claude Code wasted most of its attempt using an automated script and forgot to print out the results, thus learning nothing from the queries.

Limitations

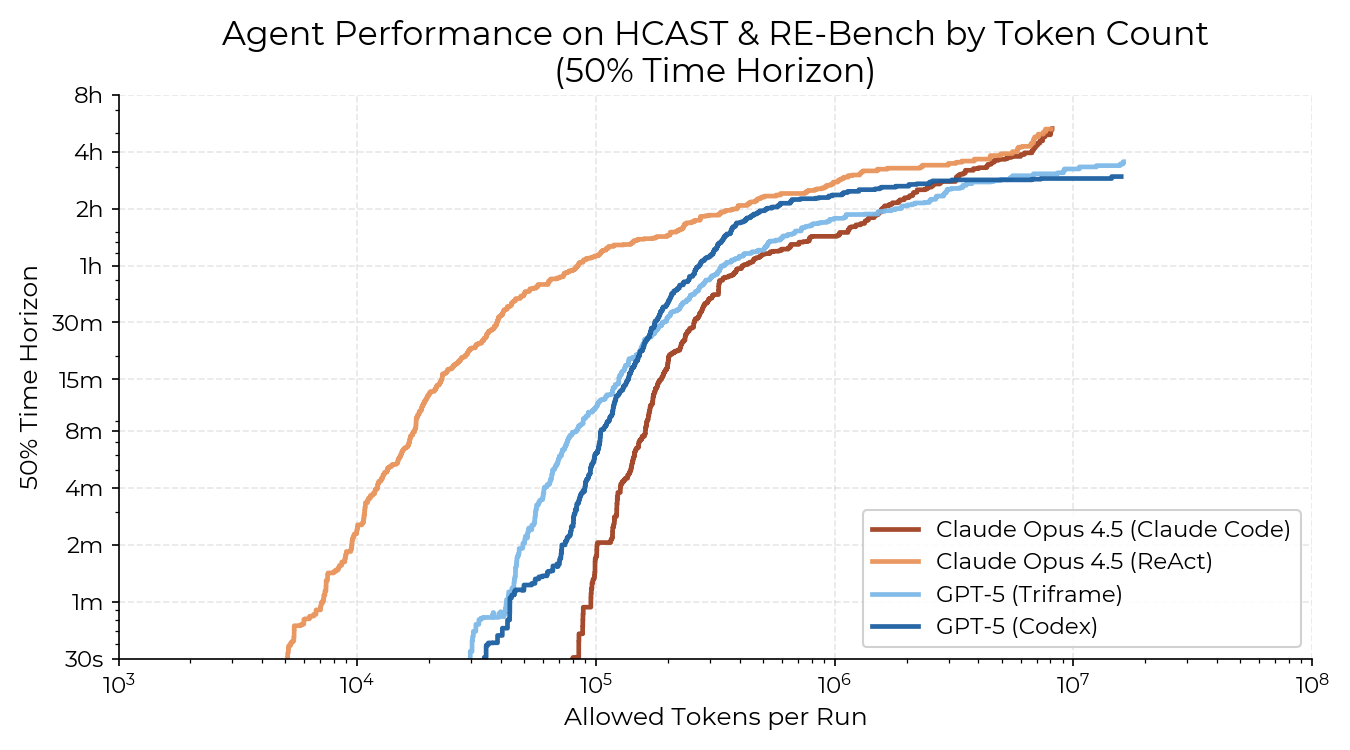

Codex and Claude Code might not have been given enough tokens. Originally, I gave each model the same token budget regardless of scaffold – Claude Opus 4.5 had 8 million tokens, and GPT-5 had 16 million. It might be that Claude Code and Codex are more token-hungry than ReAct and Triframe. To investigate this, I generated this graph:

For each agent, this graph plots its score at different token usage amounts by counting every run that hasn’t completed by that token amount as a failure. It’s meant to give some insight into whether we’re giving agents enough tokens, as an agent given too few tokens might “panic” and submit a lot of its solutions right at the end, causing a sudden uptick in time horizon.

Given this graph, I was somewhat worried about the relative lack of a plateau for Opus 4.5 with Claude Code. I decided to run another time horizon measurement with a four times higher token budget of 32 million tokens. The preliminary time horizon measurement barely changed, so I concluded that the token budget probably isn’t a huge factor.

The GPT-5-Codex model might be much better at using the Codex scaffold than GPT-5. I think this is plausible – I chose GPT-5 to more directly compare Codex to Triframe. However, I doubt that using the GPT-5-Codex model would result in a more than 2x improvement in time horizon compared to the non-“Codex” version of the model.

Our wrappers of the Codex and Claude Code scaffolds are still rough around the edges. I think that with some minor changes to Claude Code and Codex, such as additional prompting that encourages the agents not to give up early on the task, agents could perform moderately better. We also had some minor technical issues with API timeouts, but I don’t expect these to have had a large effect on the final time horizon measurement.

Conclusion

From these results, I conclude that, for GPT-5 and Opus 4.5, using Codex and Claude Code doesn’t make a huge difference for the time horizon of the model. Combined with our results for other models in the past, this makes me think that the difference probably isn’t huge for other recent models either. I expect that we’ll keep periodically measuring the differences as new models come in.

On some level, it’s not surprising that Codex and Claude Code don’t beat the default agents out of the park. Those scaffolds are mostly used in an interactive setting, where a human user often checks in on the agent’s work. In contrast, agents perform our time horizon tasks autonomously, without any human input. Also, the distribution of the types of tasks is probably moderately different in the real world compared to our task suite.

-

Before we switched our infrastructure from Vivaria to Inspect, we primarily used Triframe and Modular. Modular is very similar to ReAct. ↩

-

We performed some initial experiments with Claude Code with Sonnet 3.7 in April 2025, as well as trying out Claude Code with Opus 4, and trying out Codex for the GPT-5.1-Codex Max report. We didn’t see major improvements over our scaffolds in any of these experiments. ↩

-

We originally developed Triframe to use with o1-preview, but I consider it mostly to be optimized around reasoning models in general rather than OpenAI models in particular. ↩

-

We also have some preliminary measurements of the time horizon of Opus 4.5 using Triframe and GPT-5 using ReAct. It seems that Opus 4.5 does better with ReAct and GPT-5 does better with Triframe. ↩

-

Due to a scoring issue, I ran both agents with a different version of the scaffold (without token usage messages) on just one HCAST task family (out of 69 HCAST task families). ↩