METR works with AI developers, governments, and other research organizations, some of which provide nonpublic model access and proprietary information. Over time, we’ve developed confidentiality and security measures to protect such access and information. This post describes our approach at a high level.

Confidentiality measures

Our confidentiality policy, setup, and norms primarily address the risk of leaks during conversation and in infrastructure, though they also reduce insider threat risk by limiting who knows what.

Policy

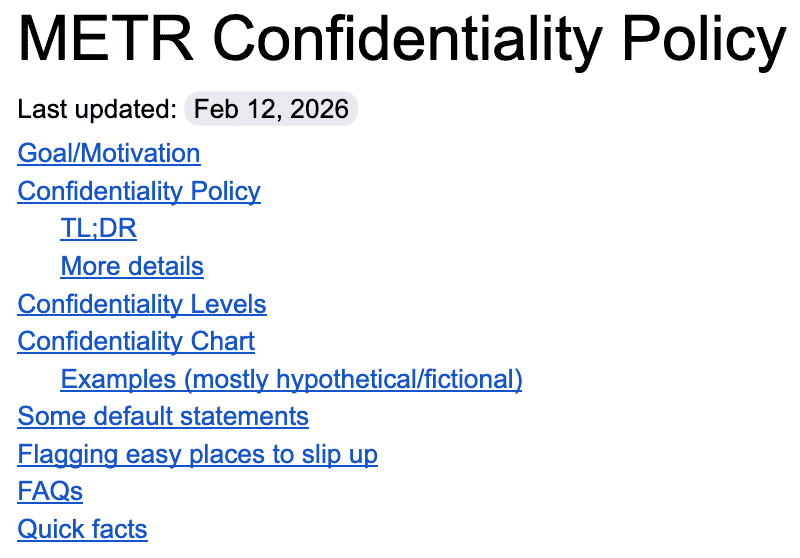

Our confidentiality policy assigns information (including, but not limited to, nonpublic access, lab relationships, policy work, and funding) to one of six confidentiality levels, ranging from public to internally siloed, based on sensitivity. At the most restricted end, information about nonpublic models (including capabilities, evaluation timelines, and which developer we’re working with) is limited to the researchers directly involved and is discussed only by codename. Our own methodology, tasks, and infrastructure are available more broadly within METR, and much of this work is eventually published.

Our policy also provides standard responses for sensitive questions, guidance on edge cases, quick rules of thumb with examples and FAQs, and possible slip-ups to watch out for. A few excerpts:

- Don’t comment on labs based on non-public info. Any comments […] should be rigorously substantiated by public information only and caveated as such.

- OK to talk about specific points […], but please do not make overall / blanket statements. e.g.:

  - OK to say “[Lab A]’s FSP doesn’t contain X component which we recommend”, […] or “METR found [Lab C’s] model had higher performance on general autonomy tasks than [Lab D’s] model”

  - Please avoid saying “[Lab A] has nothing that comes close to this level of capability”, or “No lab currently has anything close to being able to do this task”, except in official communication.

Flagging easy places to slip up:

- Sensitive information in calendar event names (visible on room booking displays)

- Confirming we don't have access to something (which reveals information by exclusion)

- Inadequate soundproofing for sensitive discussions

- Forecasting on public prediction markets about topics we may have inside knowledge of

- [...]

As part of onboarding, we conduct one-on-one confidentiality training that includes live mock questioning, so that new staff can practice responding to sensitive questions in realistic settings. We also conduct background and reference checks during hiring.

Setup

These six confidentiality levels are used as prefixes across Slack, documents, and other platforms, so confidentiality expectations are visible without relying on memory. Technical controls prevent accidental sharing; for example, documents can’t be shared externally without explicit marking, and channel membership is centrally managed. Even within METR, we silo sensitive technical and policy projects on a per-project basis. For example, to preserve confidentiality around nonpublic model access:

- Only researchers involved in a model’s evaluation can generate and see the model’s completions.

- When a lab gives us access to a nonpublic model, we generate an animal codename (e.g. “playful-panda”), and all discussion of and references to the model within METR use this codename.

- Although our agents are public and our analysis pipeline code is accessible to core team members, for each evaluation we create a secret fork of these repos that is accessible only to the people working on that evaluation.

These steps help prevent inadvertent leaks and limit exposure if our infrastructure were compromised.
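As a rough illustration of the codename and siloing steps above, here is a minimal Python sketch. The word lists, `new_codename`, and `EvaluationSilo` are hypothetical names invented for this example and do not describe METR's actual tooling; the post only states that codenames are animal-based and that access is limited to the people working on a given evaluation.

```python
import secrets

# Hypothetical word lists; the post only gives "playful-panda" as an example.
ADJECTIVES = ["playful", "quiet", "brisk", "gentle", "curious"]
ANIMALS = ["panda", "otter", "heron", "lynx", "badger"]


def new_codename(taken: set[str]) -> str:
    """Return an unused adjective-animal codename, chosen with a CSPRNG."""
    all_names = {f"{adj}-{animal}" for adj in ADJECTIVES for animal in ANIMALS}
    available = sorted(all_names - taken)
    if not available:
        raise RuntimeError("codename space exhausted")
    return secrets.choice(available)


class EvaluationSilo:
    """Illustrative allowlist of who may see a codenamed model's completions."""

    def __init__(self, codename: str, members: set[str]):
        self.codename = codename
        self._members = frozenset(members)

    def can_view_completions(self, user: str) -> bool:
        # Access is granted only to people explicitly assigned to this evaluation.
        return user in self._members


# Example usage with made-up user names.
name = new_codename(taken={"playful-panda"})
silo = EvaluationSilo(name, {"alice", "bob"})
print(silo.can_view_completions("alice"))  # True
print(silo.can_view_completions("carol"))  # False
```

Choosing the codename at random, rather than from anything related to the developer or model, means the name itself carries no information if it is overheard or appears in a calendar entry.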

Norms

Our confidentiality setup enforces some constraints, but we maintain additional norms to reduce the risk of slips in conversation. For example:

- We use codenames (like “playful-panda”) for all nonpublic models, even in conversations where both parties know the model’s identity, and we default to saying “[playful-panda] lab” rather than using the developer’s name.

- We actively recognize people for handling confidentiality carefully and encourage staff to flag potential lapses in a dedicated Slack channel.

- We maintain a log of slips and near-misses and do retros for them.

Security measures

The table below summarizes our main security controls, which protect against breaches and help limit damage from insider threats. These measures, alongside others, contributed to our SOC 2 Type I certification.

| Area | Controls |
| --- | --- |
| IAM | |
| Endpoints | |
| Monitoring & Response | |
| Governance & Assurance | |

For questions about our measures, contact security@metr.org.

The measures described above are accurate as of February 17, 2026, and are subject to change.